2023 marked an inflection point in AI usage and adoption; today, it seems like every tech company needs to have a position on AI!

In this rush toward an AI revolution, consumers are increasingly savvy about the ways in which AI can help and can harm. In fact, there have already been multiple reports of harm from AI – from bias against the LGBTQ+ community to sexism, racism, and more.

In response, industry groups composed of leading tech founders and investors have already come together to propose guidelines for responsible use of AI – here’s one example from Responsible Innovation Labs.

In this talk, we’ll discuss:

Our belief is that the companies that win this AI revolution will be the ones that build AI products in responsible, ethical ways – because it’s good for all of us.

About the speaker

Pedro Silva is a Manager of ML Engineering on the Inclusive AI team at Pinterest, where he focuses on the development and adoption of ML techniques that prioritize diversity, fairness, and equitability. Pedro is passionate about bridging the gap between theory and practice for ML applications and holds an unwavering commitment to ethical AI development.

See below for:

You can view the slides from the talk here, and see below for the full recording.

In the Q&A, Pedro mentioned providing some papers he found interesting on the topic. Here they are:

Lauren Peate 0:03

So welcome to the Tech Leader Chats. Our goal, as many of you know, with this community is to create a place where human-centric tech leaders can learn and grow together. And this is also a community where, at its heart, we care deeply about diversity, equity and inclusion. And so this topic is very near and dear to our hearts, because we want to be building tech that helps people and that doesn't harm people. My name is Lauren, and I'm the CEO and founder of Multitudes. And as a company, our goal is also around making tech equitable and inclusive. So lots of reasons that we really care about this topic. Very briefly, the plan for today is that we'll start with hearing from Pedro. This part will be recorded, so he'll share some content he's prepared, and we'll have time for a group Q&A at the end of that. And then at about that midway point, we'll shift gears: we'll turn off the recording, and then we'll give you some time to break out into a group discussion. And the goal of this is twofold. One, you can have maybe some more candid discussions than you can during the part where it's getting recorded. But also you can meet some other people who are leaders like you, who care about this and are wanting to grow and improve in this area. Along the way, you can put your questions in the chat. James will be monitoring that, he'll batch them at the end, and we'll ask those to Pedro. And

Pedro Silva 2:50

Thanks, Lauren. That's a very, very kind introduction. Give me one second here. Let me start sharing my screen.

James Dong 25:08

Awesome. Thank you so much, Pedro. That was really, really tactical. It was so helpful, because there's so much real-world advice that we got from that. So I encourage folks, if you have any questions, please go ahead and put them into the chat, and then I will be a little bit of a moderator. Just to kick it off, I want to start with a question. And Rebecca, it looks like you're here, so feel free to add more context to this question if you want. But this question is: how do you balance the need for getting informed consent from users with the potential for information overload? Rebecca, feel free to share more context, or if not, that's fine.

Lauren Peate 0:00

That's just let.

that is, that's how the session will work. So diving in, then, I wanted to share a little bit about how I met Pedro. And interestingly, I actually met him when he joined our Tech Leader Chats Slack community. And in the little intro, he mentioned that his job title was leading the Inclusive AI team. And so that immediately caught my interest. And it kicked off a Slack conversation, which turned into a face-to-face conversation. And it was all just so interesting, and frankly, so relevant, especially for this period that we're going through now with the explosion of AI, that we had to have Pedro come and speak to this community. So really, really excited that he's going to be sharing. As you can see, he's a manager of ML engineering on the Inclusive AI team at Pinterest. And he is passionate about bridging the gap between theory and practice for machine learning applications, and also holds an unwavering commitment to ethical AI development. And I've seen that in practice: he has a really strong focus on who it is that might be negatively affected by this thing that we're building. And I know in tech, it's so easy for us to get excited about the cool applications, so we need those people to remind us to pause and reflect. And also I'll say this, because I don't know if Pedro would say this about himself, but he's the person, it sounds like, that people at Pinterest go to for tips on how to use AI responsibly. And so we're so, so lucky that he's sharing his time and his insights with us today. And with that, I'm going to stop and hand it over to him. So thank you so much, Pedro. And I'll stop sharing.

Pedro Silva 3:01

Cool. All right. Can you all see my screen? Fantastic. All right. So hi, everyone. If we haven't had the pleasure to meet yet, I'm Pedro. And like Lauren said earlier, I lead the Inclusive AI team at Pinterest, which is our kind way of saying the responsible AI team. And we really look a lot into these topics. So before we go into the content of the conversation, I do have two disclaimers I need to say. The first one is that all the views expressed in this talk are my own, as a long-time learner and practitioner in the field of responsible AI, but they're not necessarily representative of Pinterest's views or opinions. So I'm here in my personal capacity; I just want to make that clear. The second disclaimer is that this talk may include content of an offensive nature with respect to gender, sex, religion, nationality, race, class, and socioeconomic status. And the point of that is basically to make sure we can see a little bit of the type of problems that can come out of this. I will give a warning; it's basically one slide. But I want to make sure that this is said up front. And I want to also say: please prioritize your mental health and disconnect if the content is beyond what you can tolerate. That's perfectly fine. But I wanted to make sure I can say that. With that out of the way, let's get into the different parts here. Right. So when I spoke to Lauren and James, we were talking a little bit about how to approach this. And they really wanted me to talk through the topic of how to safely and responsibly use AI. Right. And I was like, that's going to be very challenging. So I decided to make this a little bit more self-contained. And I was like, why don't we make this relevant to what's happened over the last few months? Let's just talk about Gen AI. Right. I think one of the awesome things that we've seen with Gen AI is how easy it is to bootstrap and build things really quickly and put them out there.
Which is amazing. I know that a lot of you work in the startup space, and velocity is key, right? You really want to build products really quickly, get them out there, proofs of concept, things like that, right? So basically, the talk is going to be around Gen AI. And when I say Gen AI here, I'm speaking in broad terms. I'm talking about all the different modalities. Right? So the first thing I want to go into is, like, what kind of Gen AI do we have out there? And it comes in all kinds of flavors, right? You have, of course, text to text: ChatGPT, GitHub Copilot, Claude from Anthropic. You have text to image: that's going to be Stable Diffusion, DALL-E, and a new GPT version is doing that right now. Text to video: there's going to be OpenAI's Sora. If you haven't seen it, I really recommend it; Sora is absolutely amazing. You have some other tools like Stable Video Diffusion. Those are not that great, but they've been out there for some time. But this is not limited to these, right? You have way more. You have text to audio, image to text. And basically all of those come into multimodality. Right? So the systems being built today are the type of system that can take an image plus a description plus a prompt and can output something else in different modalities. Right? And the question, I probably don't even need to go into this, but: why should I use this, right? And there are a lot of potential good things that can come out of this. For example, scaling up support, summarization, code assistants, right, an actual assistant for handling calendars, and all these kinds of things. In the image and video space, for example, if you're a small company, this can really scale up how you do stock imagery for products, or videos and things like that.
Also, just making your pictures look better. I think Adobe Photoshop has been making really good use of these tools in their product, for example. And even evaluating some of these problems, right? Like, how do we evaluate image quality? There are a lot of good uses for this. So that's the positive piece of it. But there are also a lot of bad things that can come out of it. And this is the warning: the next slide is going to contain a few pictures that may be offensive to some folks. But it's basically the heart of this conversation. Like, why should we care about responsibility in this space? Right? I think maybe I'm preaching to the choir with this group, but I think it's still valuable for us to have that understanding. Right. So this is just one paper of probably 50 that have come out over the last two years or so about, like, here are a lot of bad things that come out of these models, right? So for example, on the top left here, this is basically the prompt and what the image generator generated, right? So when you say "an attractive person", it's all fair-skinned people, white folks, right? So it's like, you have this concept that has to be associated with being attractive. Similarly, "a poor person": it's always Black and dark-skinned people, right? And even if you go to the middle one at the bottom here, "a poor white person" is still generating Black people. So you can see how you have this bias baked into the models. Another really bad example: if you say "a house", it looks like a typical Western, American kind of house style, right? So you're not even encompassing other cultures; even Europe and other places are very different. But as soon as you say "an African house", you see things that look a lot more like a broken-down, poor, hut kind of style. So that already has a lot of these things baked in.
And this is, again, just image generation, which is one of the ones that has really gotten a lot of usage. There are other problems here. For example, in this paper specifically, they also look at gender, right? You can see it here in the middle: if you say "give me an image of a software engineer", it was like 99% white men, right? So what does that mean? It's the same for pilots, I think. But as soon as you say "a housekeeper", it's the reverse: a lot of them are people of color, and most of them are going to be women, or at least people that have feminine traits. So this is the kind of problem that, you know, we're trying to avoid. I think all of you here would not like to have an application, or anything you build, associated with this type of issue, right? So this is in the image space. In the text space, it's not really that different. There have probably been 50 or more news stories per week about things going wrong with OpenAI, ChatGPT, and so on, right? Oh, just today I saw one where the Meta model was saying that it has a child with disabilities. And it's like, what are you saying? What in the Black Mirror world am I reading, right? But just a few of the problems that you can encounter here, right: discrimination, hate speech, and exclusion. This is usually associated with when you go into languages and countries beyond the US and English, right? A lot of these models do support multiple languages out of the box, right? So you can actually be running these experiences in India or Brazil, and they seem to work just fine. But they have a lot lower security in terms of bias and generating this type of content. So just be mindful of that. Information hazards: this one has become a big problem as well. You know, a lot of applications feed user data into the model, in the prompt part probably, to be able to generate useful things.
But then if you prompt the model enough, the model can actually reveal some of that information that you shouldn't have. Right. So that is something to keep in mind. Misinformation harms: you know, hallucinations have become a big deal. Like, the model is literally creating things, right? At the end of the day, none of this is magic. This is just machine learning. Right. So these are very fancy autocomplete models, right? So a model can actually generate things that are not truthful, because that's what makes the most sense to it, right? So it tends to have a bias towards the majority. And it also leads to poor quality of information and misleading information. Malicious uses: there are currently a few of these models available on the dark web for creating malware and creating potential attacks. So in a different lifetime, I worked in the cybersecurity space, and it has become really hot, because now the amount of new malware that's coming out of this is really, really high. And also the amount of social attacks that can be done with this, right? Literally, if you've got this recording of my voice, in like five seconds you can generate any kind of audio that's my voice speaking, and my wife cannot differentiate it, because it really reproduces very well nowadays. So, a lot of malicious usage. Environmental and socioeconomic harms: it is no secret that only a handful of companies in the world can actually train these models, because it really takes a lot of money. And that money is being spent on buying these chips. But also, you know, the creative economy is being affected here. So you have environmental impacts, because that's generating a lot of heat that's contributing to

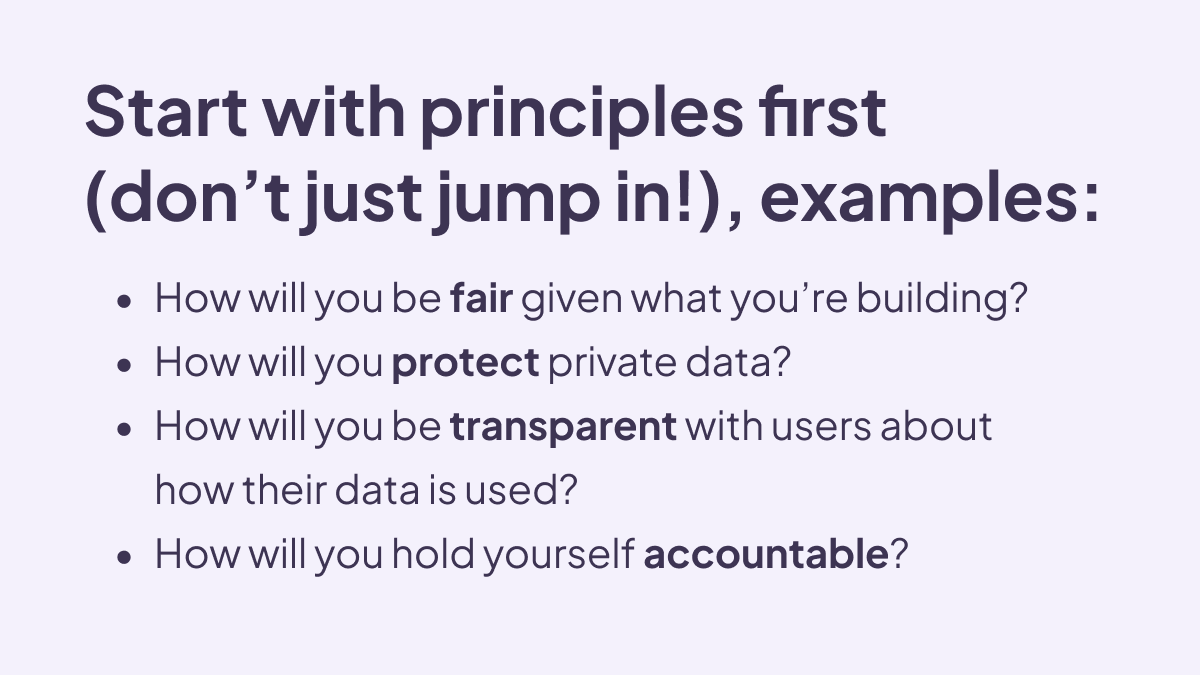

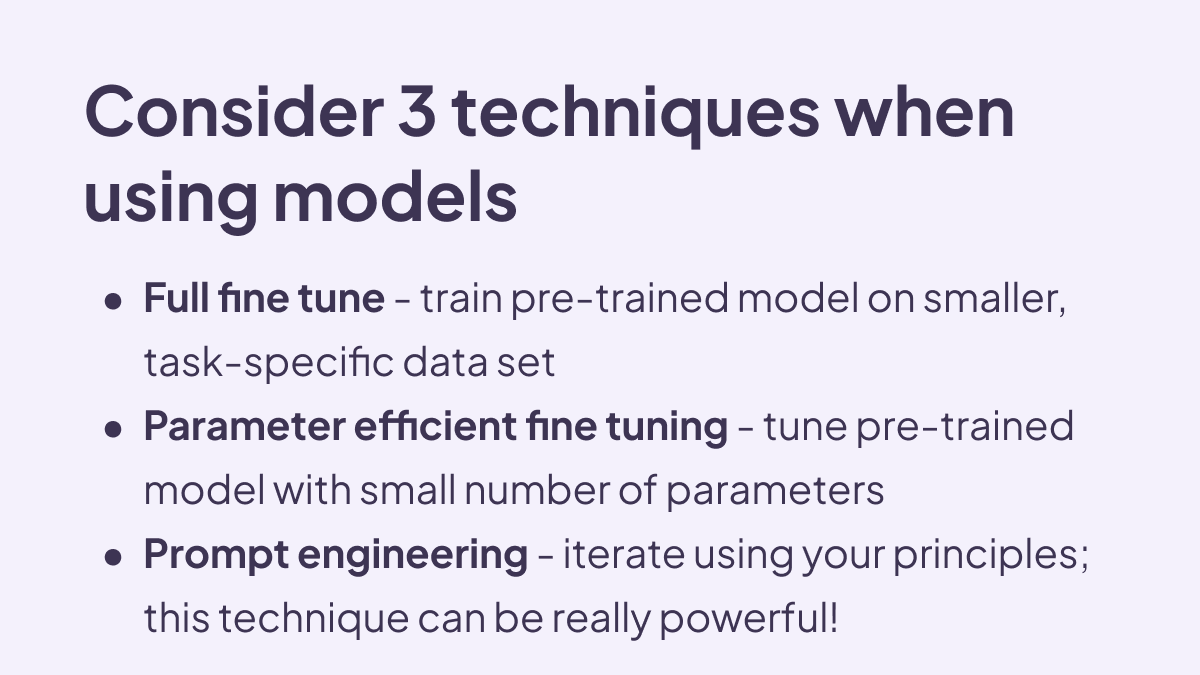

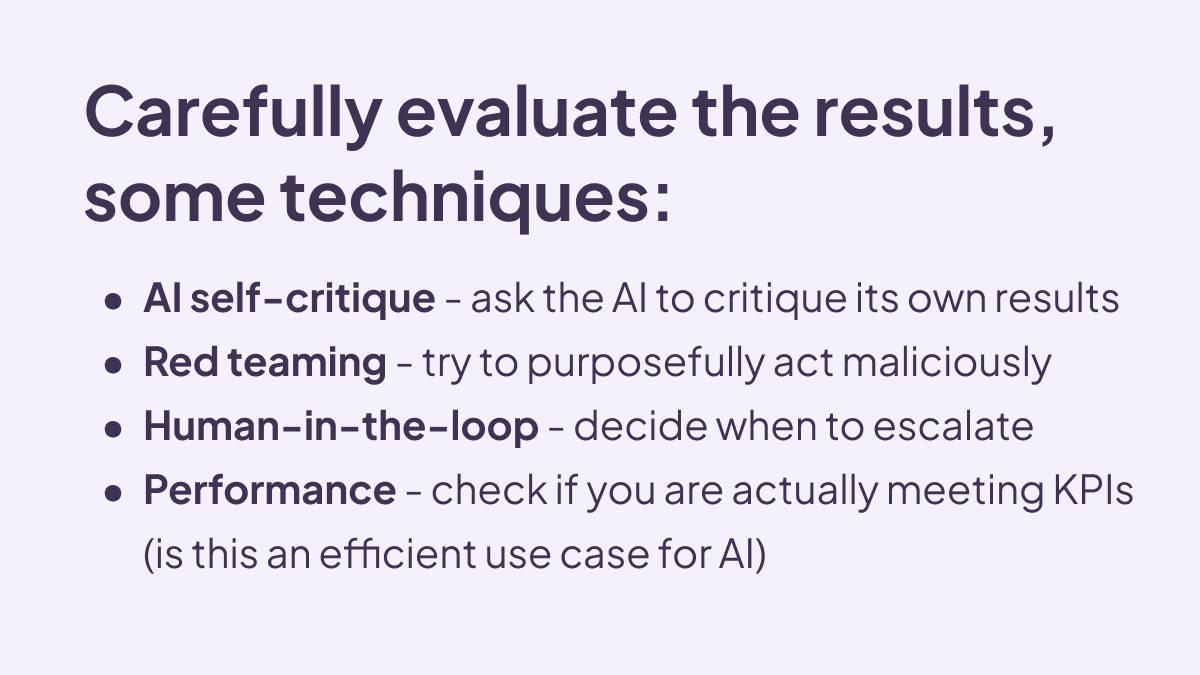

harms to the environment. And on the economic side, you know, a lot of the creative economy is being affected, because now, instead of hiring someone to do a drawing or a photo shoot, I can just generate it with a model. So there are some things to call out there. Now, okay, that's very scary. What can we do about that, right? So when you're trying to build a product, I think often we start with the vision of, like, this is what I want my product to be, this is my idea. But as soon as you inject AI into the fold, especially generative AI, one of the most important things to have is principles, right? And this is something I really encourage all of you to do before you add any of these tools: what are the principles and guidelines you want these tools, or anyone that comes to your company who's going to be leading this type of thing, to abide by? And I just have a few here that, you know, you're probably going to see if you look for principles, pillars, guidelines at any of these big tech companies. You're going to find them on their websites. That does not mean they follow all of them. But you should, right? Because again, I think we are trying to avoid this type of problem. They often encompass these four things here. Fairness: what does fairness mean in machine learning? It's a very hot topic, even just, what is fairness? Like, what am I talking about? Usually, here we're talking about group fairness, meaning you don't want different groups to be affected and to be treated differently, right? And that can be something simple, like men versus women, or people that live in country A versus country B, or people with disabilities. So you can always think about: how can I look at different groups and try to understand how they're being impacted by this tool that I'm trying to put out there? Privacy: again, going back to what I was saying about data leaks through these models, right?
There are a lot of attacks nowadays to get information out of the models that you may have provided in the prompt, or in the data part of it. So there are a lot of questions like: how can we protect protected data to begin with, and when is the time to pull the plug on these applications if anything comes to light? Thirdly, which I think is extremely important: transparency, right? I think there's nothing worse than having that feeling of, oh, I've been duped. Right, someone took advantage of me. So if you're planning on using any of these tools, you should be upfront, right? If you're generating images with generative AI, be upfront about it, like, hey, this image was generated with AI. And this helps you create trust with your users, right? And it really doesn't matter what type of application I'm talking about here; we need to have that level of trust. Like, a lot of people use these now for support, and they really try to pretend that you're talking to a human or something like that, which is bad. Like, it's really bad. It's much better to be like, hey, I have this first layer here, but if anything happens, you get to go and actually talk to a human. And that's the accountability, right? You have the ultimate control over things. So don't just be blaming, like, oh, yeah, that was the AI, that was the system that I used. Who's making those calls? Right? Someone should be accountable. So keep that in mind. With that being said, now we get into the problem of: okay, maybe I should use it, I don't know if I want to use it, but how can I actually do it? Right. And I think there is a lot of work in getting these really big models, which we call foundational models, right? So that's like your Llama model or your ChatGPT, your GPT, whatever version, 3, 3.5, 4.
And actually making them useful to your applications, right, to your company, to whatever task you need to do. There are a few different ways to do it. I listed three here, which are the most popular, right? First, fine-tuning. That means you're literally downloading a huge model, you need to load it into memory, and you train it further with your own data. It's really fun to do. But you can imagine that it's also, again, very computationally costly. And it's also going to take a lot of memory. And of course, it takes a lot of expertise and knowledge, right? In a similar tone, you can also do what is called PEFT, parameter-efficient fine-tuning. This one takes a little bit less time, but it still requires some knowledge, right? You need to have someone who probably has a lot of understanding of machine learning. How do I set this up? Do I need to talk to AWS? Now things have really gotten out of proportion, right? And probably the third one here, which a lot of people don't think has a ton of power, but it actually has: prompt engineering, right? So this is something you can do now, which is literally being very specific about what you want out of this model. And this is where, again, your principles might come in handy, right? Those principles can be part of how you determine how you write a prompt, and can be part of the instructions for this model. Be very specific about what you want. Right? Sometimes we just say, "evaluate this dataset". Like, what does that mean? Even if you say that to a human, it's like, what do you mean, evaluate this dataset? Be specific, like: evaluate this dataset, break it down by gender in a certain manner, I want to understand A, B, C, give me a summary in two paragraphs. Things like that. The more specific you are, the better your outcomes are going to be.
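As an illustration of the "be specific" advice above (not something shown in the talk), here is a minimal Python sketch that assembles a prompt from an explicit task, breakdowns, questions, output format, and a list of principles. The `PromptSpec` class, the `build_prompt` function, and the example dataset and principles are all hypothetical names invented for this sketch; it only builds a string and does not call any model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces of a specific prompt."""
    task: str
    breakdowns: List[str] = field(default_factory=list)
    questions: List[str] = field(default_factory=list)
    output_format: str = ""
    principles: List[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Assemble a prompt that states the task, scope, format, and principles."""
    lines = [f"Task: {spec.task}"]
    if spec.breakdowns:
        lines.append("Break the results down by: " + ", ".join(spec.breakdowns) + ".")
    for i, question in enumerate(spec.questions, start=1):
        lines.append(f"Question {i}: {question}")
    if spec.output_format:
        lines.append(f"Output format: {spec.output_format}")
    if spec.principles:
        lines.append("Follow these principles:")
        lines.extend(f"- {p}" for p in spec.principles)
    return "\n".join(lines)


# Example values are made up for illustration only.
spec = PromptSpec(
    task="Evaluate the attached dataset of support tickets.",
    breakdowns=["country", "language"],
    questions=["Which groups wait longest for a first reply?"],
    output_format="A summary in two paragraphs.",
    principles=["Do not reveal personal data.", "Say so when you are unsure."],
)
print(build_prompt(spec))
```

Keeping the principles as data rather than hard-coding them into each prompt is one possible design choice: the same list can then be reused across every prompt a team writes.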
And one of the things to keep in mind is that a lot of these models, as they have been evolving, have been becoming very powerful at keeping context, right? We call it the context window. Some of the latest models that have been coming out have context windows of up to like 250, 256,000 words. That's like several books; you can literally fit all of that information. And iterate as needed. Things don't have to be frozen in time. If you're trying something out, you're trying to evolve it, and after some time it stops giving you the output you want, keep evolving that prompt, right? And then we're going to get into probably the most important part of all of this, which is the part that I usually put a lot of time into when I'm working on these things, which is evaluation, right? Really, evaluate like your life depends on it, because oftentimes your company's life might depend on it if things go wrong, right? So I just have a few different ways that we go about it when we look into evaluating generative experiences. So the first one is context dependency, right? The biggest risk in these experiences is when they have open-ended inputs and outputs, right? Meaning, you literally open up a prompt so the user can type anything they want. Maybe instead of having a prompt, you can literally have just a checkbox, where you're selecting something that you have already determined, and that's going to be all the information you need from them. Same thing for the output, right? That can be part of your prompt, saying, like, hey, the output I want has to be one of these options: A, B, C, D, E. And that's it. So you're limiting your risk by limiting the input and output.
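The idea of limiting risk by limiting inputs and outputs can be sketched as follows. This is an illustrative example rather than anything from the talk: the topic list, the answer labels, and both function names are assumptions, and the model call itself is left out so the sketch stays self-contained.

```python
from typing import Optional

# Hypothetical closed sets: the user picks a topic from a fixed list
# (a checkbox or dropdown) instead of typing free text.
ALLOWED_TOPICS = ("billing", "shipping", "returns")
ALLOWED_ANSWERS = ("A", "B", "C", "D", "E")


def build_constrained_prompt(topic: str) -> str:
    """Build a prompt from a pre-approved topic; reject anything else."""
    if topic not in ALLOWED_TOPICS:
        raise ValueError(f"Unsupported topic: {topic!r}")
    return (
        f"The user selected the topic '{topic}'. "
        "Reply with exactly one letter from A, B, C, D, E and nothing else."
    )


def parse_constrained_output(raw: str) -> Optional[str]:
    """Accept the model's reply only if it is one of the allowed options."""
    answer = raw.strip().upper()
    return answer if answer in ALLOWED_ANSWERS else None
```

A caller would show the user a fallback (or retry) whenever `parse_constrained_output` returns `None`, so that free-form model text never reaches the user directly.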

The second thing I have here is online versus offline use cases. When I say online here, I'm referring to generating things in real time. That means, literally, you have a model, the user will give an input, and the output is going to be generated in real time. You have a lot less control over that, because you just don't know what the model is going to put out. But if, for your specific application, you can generate things offline, it makes it much easier for you to test it out, try things against it, and make sure what you're putting out there is a little more safe. And it's also much cheaper. Generating things in real time is going to cost you a lot of money, and it often comes with latency requirements: how long is that going to take? Some generations, depending on what kind of product you're doing, may take between like 15 seconds and a minute. And if that's a real-time thing, what is your user going to be doing during that period, right? They might literally just drop off and go. Number three: testing Gen AI with Gen AI. That's really one of my favorite things to do, which is, you can actually use a prompt to test the output of another model, right? So let's say you have a task, and my task is like, I want you to rank the pictures of these beautiful cats in order of prettiness. You can have a different model that basically takes that output and says, like, hey, this was what I asked the model for, this is the output it gave me, give me a critique, right? So you're literally pitting Gen AI against Gen AI here. That can be the same model; it doesn't really matter. It's a different task, right? And that can help you find a lot of issues really early on. Number four, probably one of the most important here. So number three helps you automate a lot of this, but you cannot remove people from the process, right? And red teaming is really fundamental for that.
So red teaming, again, came a little bit from the cybersecurity space, where you had pentesting: you had literally people trying to break your system, hack your security. This is the same thing, but in the Gen AI space, where, after you have built the experience, you put yourself in the shoes of a malicious actor. How can someone try to break this experience and generate bad things? And just try it yourself. Try it with your team. This is not limited to specialists, to people that are ML engineers, right? You can do it, because maybe users will do it inadvertently. So you should just try it. Try to break the experience, honestly. And number five here: humans in the loop. Very fundamental. A lot of people, when they talk about this, really just want to get things done, and no one ever looks at this again. And then a few months later, things break. Maybe if you had someone trying to look for and find problems more proactively at a certain cadence, people would have found stuff, right? That's, for example, the case that happened with Google Gemini, where they were generating Nazi pictures of African American people. That was a very simple thing to test. Right? But how often were they doing those tests? Probably not often enough. Performance: this is where, you know, is it actually performing? Is it actually doing what you want it to do, better than a human would do, or any other traditional machine learning system? People almost forget that machine learning is not all about Gen AI; not everything is about Gen AI. There is a lot of room for other tools, too. Now, I am almost at time. I just want to close with some quick thoughts here. You know, for better or for worse, the AI revolution is here. There is no putting the toothpaste back in the tube.
I think what we have to do as technologists is make sure that when we're using these tools, we're giving them the thought they have to be given. We respect them, in a way, but we need to be responsible in how we use them. Right. And that's my point number two: be responsible and accountable. Don't sacrifice quality for speed, ever. That is the quickest way for products to die, right? I want to really make sure that my thing is out there tomorrow. But if you're not checking for quality, it might just have that one chance, and it might die earlier than it should have. Number three here: don't overfit on Gen AI. A lot of problems are actually going to be better solved using different tools, and different AI tools even, right? Like, literally five years ago, the belle of the ball was deep neural nets, right? And those still exist, they're still out there, and they're still fantastic for a lot of tasks. There are so many different tools in the AI space that can be used for different tasks. So don't just use this hammer for everything. It is powerful. It is super fun to use. I'm not going to lie, I'm all in favor of it. But just be mindful of that. And lastly, be proactive and be creative. Different tasks will require different models, different approaches, and different techniques, even for testing, development, and evaluation, right? I'm often told, like, hey, I have two super cool projects that I want to launch tomorrow, can you help me test them out? And I'm usually going to say, I will help you, but not by tomorrow, because I need to think about what can go wrong in this specific experience. Right. And oftentimes that is different from other things. You really have to think about that. And with that, I'm going to end it here. Thank you.
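The "testing Gen AI with Gen AI" technique from the talk, where a second prompt critiques the output of a first, can be sketched roughly like this. It is a minimal illustration, not the speaker's implementation: the template wording, the `critique` function, and the PASS/FAIL convention are assumptions, and `stub_critic` is a stand-in for whatever real model call a team would plug in.

```python
from typing import Callable, Dict

# Hypothetical critic prompt: it restates the original task and the output
# under review, and asks for a verdict in a fixed, easy-to-parse shape.
CRITIC_TEMPLATE = (
    "You are reviewing another model's work.\n"
    "TASK:\n{task}\n\n"
    "OUTPUT:\n{output}\n\n"
    "Start your reply with PASS or FAIL, then give a short critique."
)


def critique(task: str, output: str, critic: Callable[[str], str]) -> Dict[str, object]:
    """Ask a critic model to review an output and parse its verdict."""
    verdict = critic(CRITIC_TEMPLATE.format(task=task, output=output)).strip()
    return {"passed": verdict.upper().startswith("PASS"), "critique": verdict}


def stub_critic(prompt: str) -> str:
    """Stand-in for a real model call; it only flags placeholder text."""
    if "lorem" in prompt.lower():
        return "FAIL: the output contains placeholder text."
    return "PASS: nothing obviously wrong."


result = critique(
    task="Write a one-line caption for a photo of a cat.",
    output="Lorem ipsum dolor sit amet.",
    critic=stub_critic,
)
print(result["passed"], result["critique"])
```

Because the critic is passed in as a plain callable, the same harness can run offline over a batch of generated outputs before anything reaches users. As the talk stresses, this automates part of the review but does not replace red teaming or humans in the loop.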

All right, I can I can. Go ahead.

James Dong 25:52

I think Rebecca's trying to unmute herself. Oh, there we go. Yeah.

Speaker 1 25:57

Yeah, I was, sorry. But yeah, go ahead, because we seem pretty limited on time. It was mainly around design research. So when you're working with people, there's just a lot of information to take on board and really think about for people to really make a decision. So it can feel like it slows things down.

Pedro Silva 26:21

Yeah, Rebecca, that's a really good question. I tend to bias toward the transparency side of things. You know, I think I've seen a cool application that came out recently of scaling up mental health support via these tools, right? And I think that only works because, right at the beginning, you already know what's happening, like, hey, I know this is not a person, but it can help me to a certain extent. So the privacy piece here is also about, you know, transparency with the users: whether they know or not that what they're seeing is AI-generated. And understanding that there are processes to go beyond that, like, oh, maybe if I don't like this experience, I can file a ticket, and that's going to get to a person who is going to read it. The privacy piece of it is also a challenge that we always see. You know, I work in this inclusive AI space, where if I knew everything about my users, I could probably give them a better experience. Do they want to tell me everything? Probably not. Do I want to know everything? Probably not. But there is always that tension, right, between privacy and personalization, to a certain extent. And I'll be honest with you, I don't have a fleshed-out answer. That's a line that I really walk on a per-application basis. Because, you know, sometimes you just shouldn't know things about people, even if you think you would provide a better experience.

James Dong 27:44

Great, thank you. So Lauren has a question here. Can you share an example (it's fine to anonymize it) of a time where someone came to you with a model they built and you helped them improve it? Tell us your process for evaluating the model, and what recommendations you ended up making.

Pedro Silva 28:02

Yeah, that's a good question. Let me try to think about that. I think there was one Yeah, there was an interesting one that came to light a few months ago, where it was a project about generating some text about some pictures. And that person came to talk to me to the team. And the question was basically like, Hey, here's all the testing I've done. Can I put this? Can I actually take, do you think this is safe enough to put it out there? And it's always funny, because it's like, I don't have a stamp of like, this is great. Put it out there. Let's see how it goes. And people really want that and just know how it works. So one of the things that did was almost like a red teaming approach of like, how could this go wrong? And if the input on the scale is on the images, what kind of images could I put on it is that I would basically generate harmful things. So I'm always trying to think from talons of who would be would be the people that could be mostly negatively impact, but it is experience, right? And I'm gonna be honest with you, none of these projects and all these things that we ended up working on, no one is coming with bad intent, right? Most of the time, like all the time, people have like really good intentions. And sometimes it's actually building positive things out there. But you really need to think from the lens of like, who'd be really poorly impacted about it. So on this one specifically, we did I did find a lot of images that were generating questionable text as output. And they actually took them back into the drawing board. And they did some work on on improving some prompt engineering, actually. So it was kind of quick integration. And they've got rid of it. And they also limited a little bit of the exposure, right? A lot of times, it's like, I can just open this up into the whole, like whatever corpus you have, maybe you can start small right? 
Start with your MVP, start on talent and you know, it's a little bit safer and a little bit self-contained. With time you can expand it as you build more capabilities. That's usually the process we take. And the second one, which can never be forgotten: look at your performance metrics, right? Like, what is the actual target metric you're trying to improve? Is this experience improving that or not? And if not, why? And there can be a myriad of answers to that, but that's the process we take.

James Dong 30:24

Great, thank you. Next question is from Scott. So most discussions around ethics are about the use of the tools. What about the ethics of the design, creation and training? Do you have any input on ways that people can and should consider those elements?

Pedro Silva 30:40

I do a little bit, but very limited. I actually am fortunate to work with a really good design team. We have a few designers that literally think about inclusion in everything we build, which really helps me. It's fascinating, the amount of times I'm like, I haven't thought about that before. And I'm learning something new just being in the room with a lot of these folks. So I actually had a really interesting conversation last week with someone. We had built a tool to help a certain part of the population that's often underserved. We had built this amazing thing, and we were really proud of it. And then when we shared it a little bit more broadly, we got feedback like, hey, how does this work for people who have difficulty reading, so they have some accessibility needs? And we're like, oh, actually, maybe we haven't thought about that. Let's take a look. And it was actually a pretty poor experience, which led to good work. And like, you know, we should make this better. So often, these questions will go back almost to my previous answer, which is like, who are the people that maybe don't have a great experience on this, and why? Right. And again, I think this group is a really good group of people that have those thoughts about, like, how these things affect minorities, how this affects people that, you know, you don't have to be my neighbor. Maybe I'm building something and someone on the other side of the world is going to be using it. How does that affect their experience on this application? That's often how we think about it. But I'm pretty sure that wasn't a great answer, because I have the honor of working with great designers that have thought about this much more than I have.

James Dong 32:27

Thank you. And that's an answer too, right? You find really good designers who are also very principled about that. Yes. Another question is from Briana: how do you think the guardrails should be different between prototyping versus production?

Pedro Silva 32:43

Oh, that's a good one. I think in prototyping, you have a lot more freedom for things to go awry, right? You can try a lot more things. It's kind of like sandboxing, right? You can really try a bunch of different things here, things can go wrong, and that's perfectly fine. I think the guardrails for prototyping have to be often based on your principles, right? So like, why do I want to build something that's going to be basically revealing private information about my users that I shouldn't know to begin with? So that's, for example, something you shouldn't even do in prototyping, right? And honestly, you can actually build a lot of things unintentionally. When you go into production, I think the stakes are much higher, right? Because in production, you really need to think about some cases that are really out of the box. So to me, every time I'm building something new, the first thing I think about is how can I limit the amount of exposure this is going to have, in terms of what it can cause if it's used to generate experiences, right? If you think about normal, like traditional machine learning models, it often comes down to data, right? Like, when you look at your data for any model you're building, how balanced is it in terms of some of the sensitive attributes, again, to the extent that you can know how balanced it is? We often have the saying in machine learning, right: garbage in, garbage out. So if your data is bad, you cannot expect to just have a great tool, a great machine learning model, out of that, right? So there is often a little bit of wiggle room on the data side to make things better from the get-go, right?
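
The data-balance check Pedro describes could be sketched roughly as follows. This is a minimal illustration and not a method from the talk: the function names `group_shares` and `underrepresented`, the `age_band` attribute, the sample records, and the 20% threshold are all hypothetical choices made for the example.

```python
from collections import Counter


def group_shares(records, attribute):
    """Return the fraction of records for each value of `attribute`.

    Records missing the attribute are counted under "unknown", since
    (as noted in the talk) you can only measure balance to the extent
    that the attribute is known.
    """
    counts = Counter(r.get(attribute, "unknown") for r in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, min_share=0.2):
    """List groups whose share falls below `min_share` (an assumed threshold)."""
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical training records: 6, 3 and 1 examples per age band.
sample = (
    [{"age_band": "18-29"}] * 6
    + [{"age_band": "30-49"}] * 3
    + [{"age_band": "50+"}] * 1
)

shares = group_shares(sample, "age_band")
flagged = underrepresented(shares)
print(shares)
print(flagged)
```

A check like this only surfaces imbalance. Deciding what to do about it (collecting more data, reweighting, or limiting exposure) is still a judgment call.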

James Dong 34:21

Great, thank you. And then, let's see.

Lauren Peate 34:26

I can bet you're happy with that. And so I'm making you read it. Yeah. Yeah. And I know, you know, I know how much time you spend staying up on the research around all of this. And so would love to hear, you know, maybe for those who want to do some more reading on this, what are some of your favorite papers and why?

Pedro Silva 34:44

Oh, that's a good question. I have a few different ones that have come out in the last year. I can probably share them with you so you can share with the group. And a lot of them helped inform this talk on, like, you know, potential harms we have. And they are some of my favorite papers because they really help you think outside of the box, right? A lot of these things that I've seen, I didn't come up with. I'm basically reading and thinking, like, this is really interesting, and I didn't even think that it could cause this type of harm, right? And I'm pretty sure that whoever is building these models is not thinking about that, right? They don't want the model to be generating, like, biased things and racist things. That's not the goal, right? So there has been a lot of research around this area of basically trying to break these models. And it's just sad that sometimes it happens, and you actually see it out there. But I think it's a necessary part of the process, right? And, like, one of the things that we see is, when the car was created, there was a lot of confusion about it, right? Because, well, we have this machine, it's really fast, it's gonna kill people. It's not safe, it's metal. But we adapted, right? And we will adapt to this the same way. But we need to create guardrails, we need to create the seatbelts, we need to create the stop signs. So to me, I'm really interested in that space, right, in understanding like, okay, what are the things that can go wrong? And how can we fix them as we go? And that really varies, like, between "I have full ownership of this model to do all the fine-tuning I want in my life" and "Well, I don't have the budget, I can work on the prompt side of it, and that might be okay for now."

James Dong 36:22

Awesome, at least for me, that was a really good analogy that I really liked to close on. So we're gonna go ahead and shift into the small group discussions. I'm gonna go ahead and stop the recording and then share my screen. So give me a moment to do that.

Transcribed by https://otter.ai